Rescaling, rotating and translating a model so that it fits a similar one using MeshLab

So here we are: we have shitty pictures; 123dCatch made a good mesh and photoscan made a shitty one, but we want photoscan’s texture.

We will need to scale and orient the 123dCatch model so that it has exactly the same scale and orientation as the photoscan model.

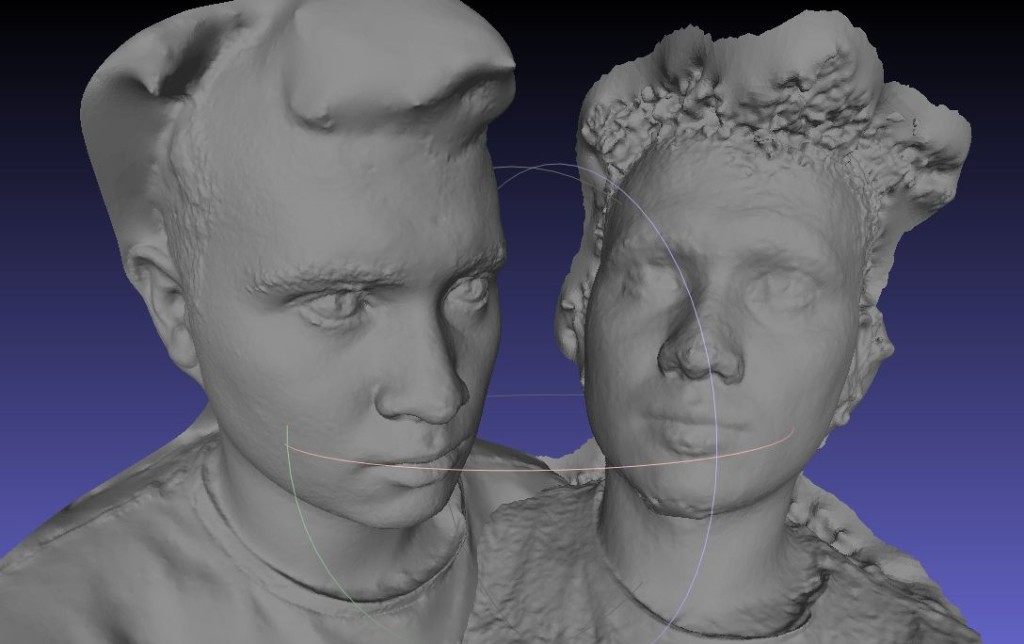

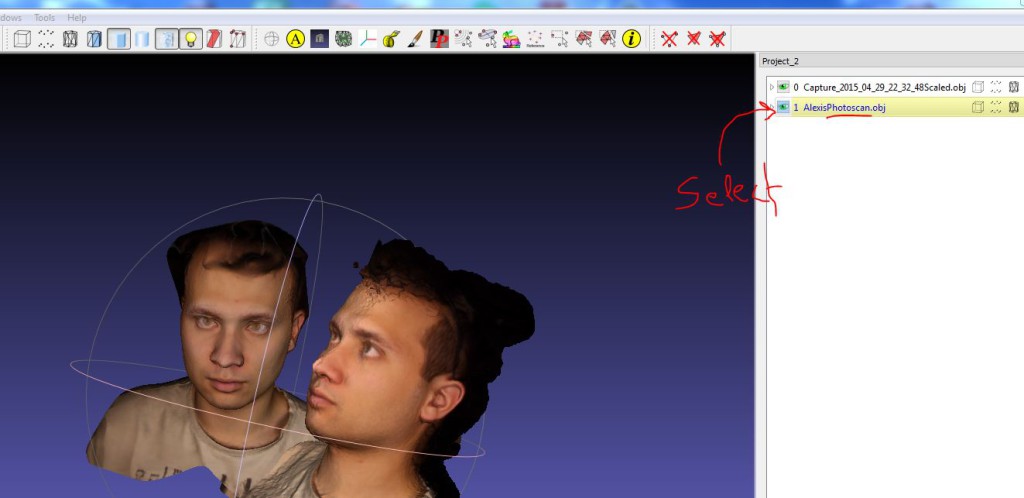

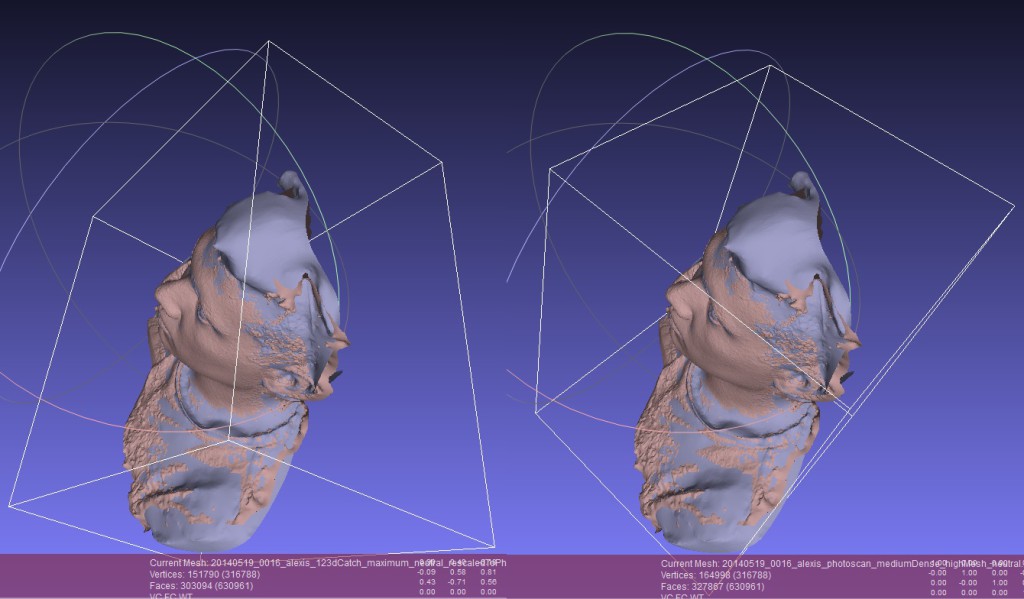

Left: 123dCatch model; right: photoscan model.

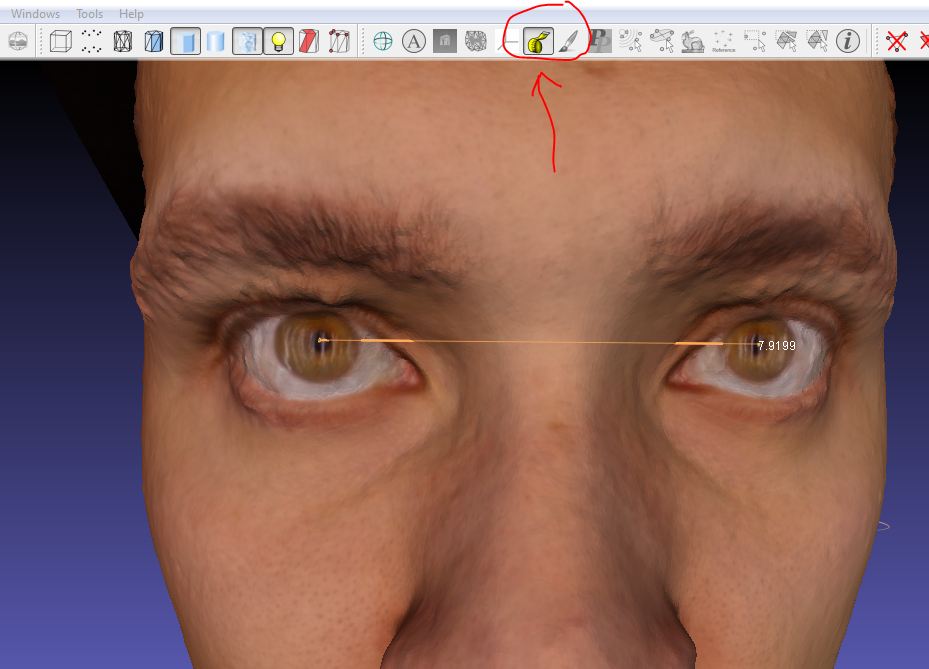

First step, scaling: open the photoscan mesh and measure the distance between the two pupils.

Do the same thing for the 123dCatch model.

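Scaling is done by multiplying the model by a coefficient. Writing d for the pupil distance measured on each model, we have

d(123dCatch) × coefficient = d(photoscan)

coefficient = d(photoscan) / d(123dCatch)

With 0.69 measured on the photoscan model and 7.86 on the 123dCatch model:

coef = 0.69 / 7.86 ≈ 0.0878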
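A small Python sketch of the same arithmetic, with the photoscan pupil distance (0.69) as numerator and the 123dCatch one (7.86) as denominator, which is the order that yields a coefficient of about 0.088. `scale_coefficient` is a hypothetical name used only for illustration.

```python
def scale_coefficient(d_photoscan: float, d_123dcatch: float) -> float:
    """Coefficient such that d_123dcatch * coefficient == d_photoscan.

    Both distances are the same feature (here, the pupil distance)
    measured on each model, in each model's own units.
    """
    if d_123dcatch <= 0:
        raise ValueError("the 123dCatch distance must be positive")
    return d_photoscan / d_123dcatch


# Pupil distances measured in MeshLab on each model
coef = scale_coefficient(d_photoscan=0.69, d_123dcatch=7.86)
print(f"coef = {coef:.4f}")  # prints coef = 0.0878
```

Putting the target model in the numerator means the coefficient is below 1 when the 123dCatch model is the larger one, which is the case here.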

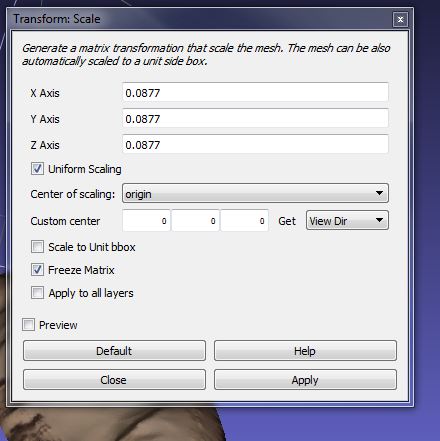

Using Filters > Normals, Curvatures and Orientation > Transform: Scale, enter your coefficient and press Apply.
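Uniform scaling amounts to multiplying every vertex coordinate by the coefficient. The sketch below does that directly on the text of an OBJ file, assuming uniform scaling about the origin; `scale_obj_text` is a hypothetical helper, not part of MeshLab. If your MeshLab version scales about another center (for example the barycenter), the result differs from this one only by a translation, which the alignment step removes anyway.

```python
def scale_obj_text(obj_text: str, coef: float) -> str:
    """Uniformly scale every vertex position ('v x y z') about the origin.

    Texture coordinates ('vt'), normals ('vn') and faces ('f') are left
    untouched: uniform scaling changes neither UVs nor normal directions.
    """
    out_lines = []
    for line in obj_text.splitlines():
        parts = line.split()
        if parts and parts[0] == "v":
            # First three values are x, y, z; keep any extra values (w or colour) as-is
            xyz = [float(c) * coef for c in parts[1:4]]
            rest = parts[4:]
            line = "v " + " ".join(f"{c:.6f}" for c in xyz)
            if rest:
                line += " " + " ".join(rest)
        out_lines.append(line)
    return "\n".join(out_lines) + "\n"


sample = "v 1 2 3\nv 0 0 0\nv 4 0 0\nvt 0.5 0.5\nf 1 2 3\n"
print(scale_obj_text(sample, 0.5))
```

Working on the vertex lines only keeps the texture mapping intact, which matters here since the texture is what we are after.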

Export the rescaled model as OBJ; we will work with it from now on.

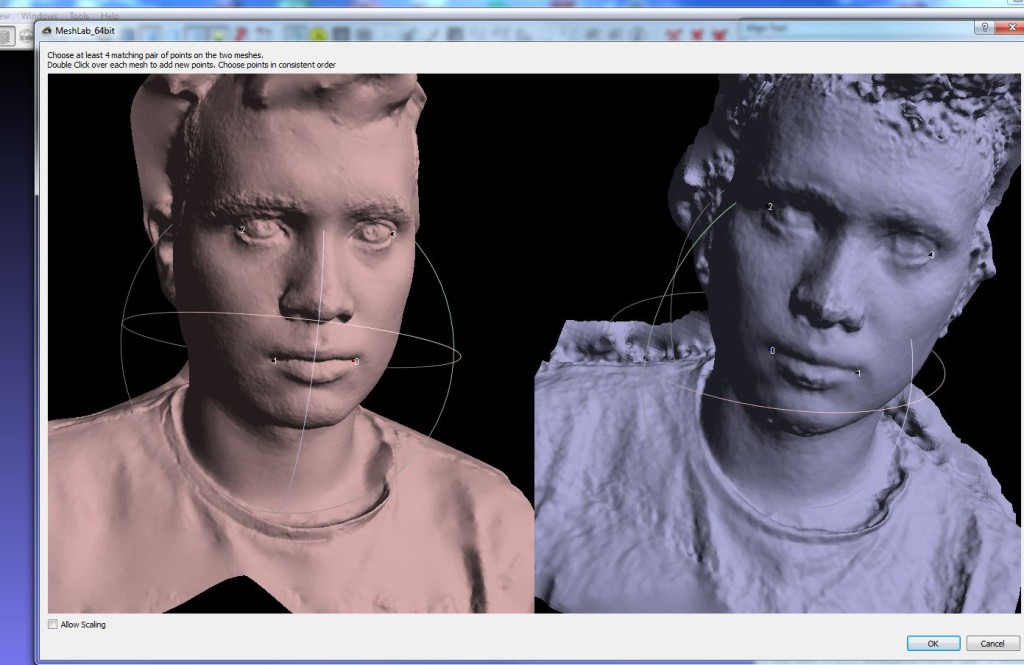

Open a new MeshLab window and use File > Import Mesh to import the photoscan model, then use File > Import Mesh again to open the scaled 123dCatch model.

You now have one project with two models. Select the photoscan model in the layer window.

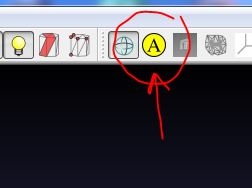

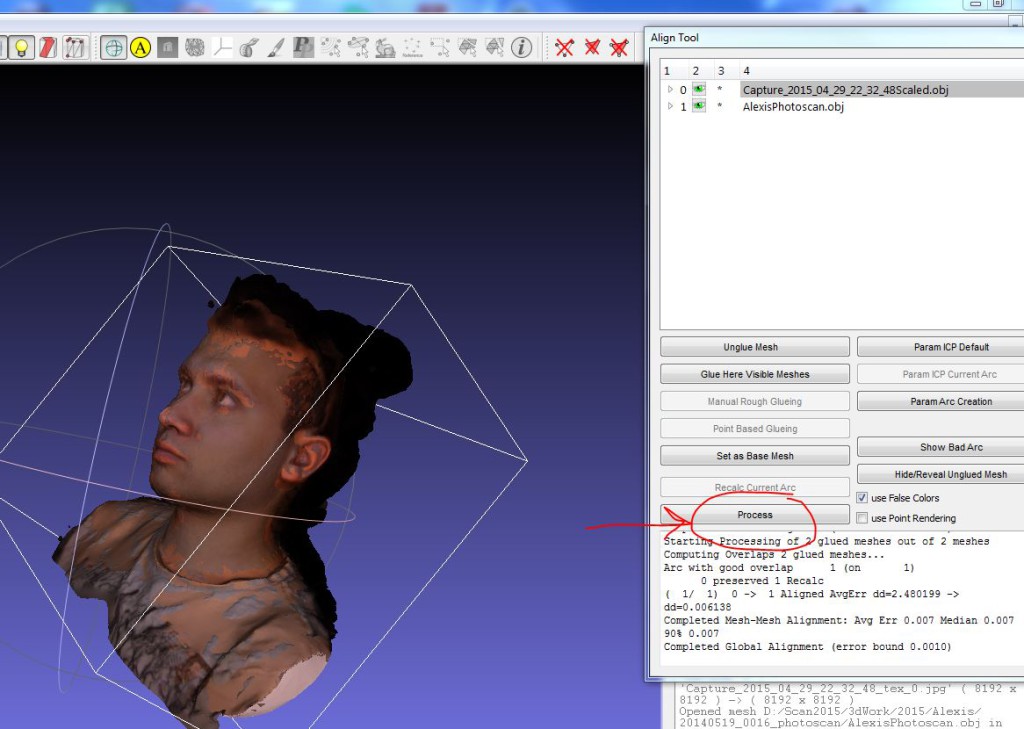

Click the Align tool.

Making sure the photoscan model is still selected, click "Glue Here Mesh". This glues the photoscan model in place and ensures that the 123dCatch model is the one being moved.

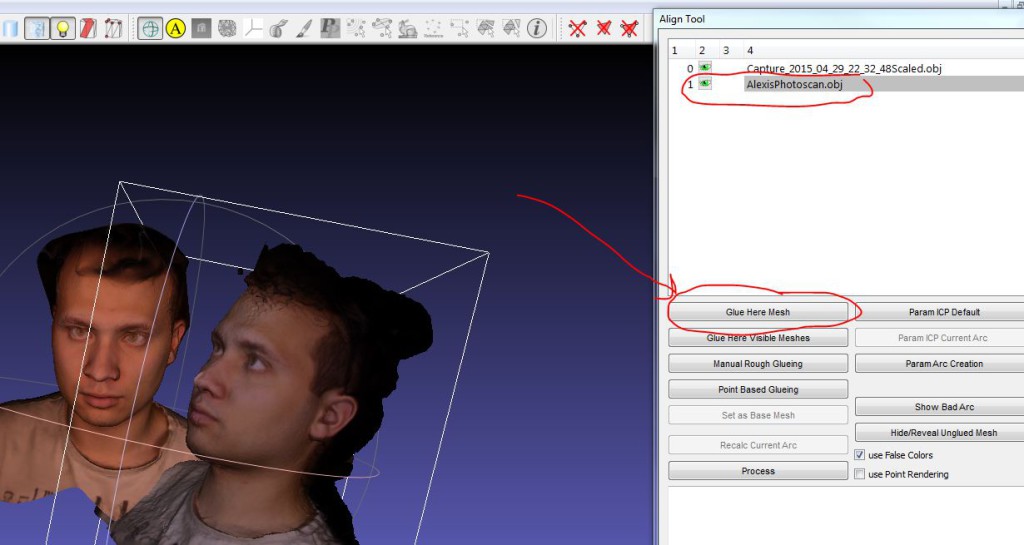

Select the 123dCatch model in the layer window and click "Point Based Glueing".

This opens a new window: pick 4 points on each model (at the same positions on both, of course).

Textures are not displayed and you cannot pan (strafe) the view, so it’s a bit tricky; however, you do not need to be very precise.

Double-click to mark a point and Ctrl+double-click to remove one. Press OK when everything is in place.

The screenshot below shows the 4 points picked on each model.

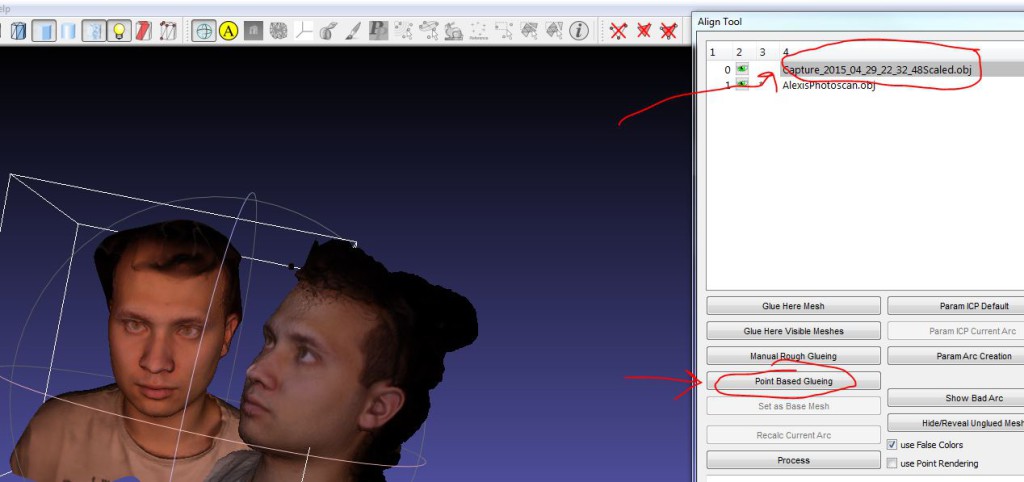

Then click Process, and the 123dCatch model should be fitted onto the photoscan model.

Note that the alignment only applies translation and rotation; that is why we first scaled the model so that both models have the same size.
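Fitting one set of picked points onto another using only rotation and translation is the classic rigid registration problem. Below is a sketch of one standard least-squares solution, the SVD-based Kabsch method, in Python with NumPy; it is not necessarily the exact routine MeshLab runs, and `rigid_fit` is a hypothetical name. Because the fitted transform preserves distances, it also shows why scale has to be fixed beforehand: a model ten times too large stays ten times too large.

```python
import numpy as np


def rigid_fit(src: np.ndarray, dst: np.ndarray):
    """Least-squares rotation R and translation t with R @ src_i + t ≈ dst_i.

    src, dst: (N, 3) arrays of corresponding points, N >= 3, not collinear.
    No scale factor is estimated: this is a rotation + translation fit only.
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance matrix of the two centred point sets
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so we return a rotation, not a reflection
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


# Four made-up "picked" points on the (already scaled) 123dCatch model
src = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
angle = np.radians(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.2, -0.1, 0.5])
dst = src @ R_true.T + t_true  # the same points as seen on the photoscan model

R, t = rigid_fit(src, dst)
print(np.allclose(R, R_true), np.allclose(t, t_true))  # True True
```

With picked points the correspondences are noisy, so the fit is only approximate, which is why precision is not critical at this stage.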

Clicking the Process button several times improves the fit (I clicked it 12 times).
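The Process step refines the rough point-based fit with an ICP-style (Iterative Closest Point) alignment, which is why pressing it again can keep improving the result: each run starts from the pose the previous one reached. The sketch below is a bare-bones point-to-point ICP in Python with NumPy, using brute-force nearest neighbours on synthetic points; MeshLab's implementation is more elaborate (sampling, distance thresholds), and all function names here are hypothetical.

```python
import numpy as np


def rigid_fit(src, dst):
    """Kabsch least-squares fit: rotation R and translation t with R @ src_i + t ≈ dst_i."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    U, _, Vt = np.linalg.svd((src - src_c).T @ (dst - dst_c))
    d = np.sign(np.linalg.det(Vt.T @ U.T))  # avoid returning a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, dst_c - R @ src_c


def nearest(moving, fixed):
    """Closest fixed point for every moving point (brute force) and the squared distances."""
    d2 = ((moving[:, None, :] - fixed[None, :, :]) ** 2).sum(axis=2)
    idx = d2.argmin(axis=1)
    return fixed[idx], d2[np.arange(len(moving)), idx]


def nn_rms(moving, fixed):
    """RMS distance from each moving point to its closest fixed point."""
    return float(np.sqrt(nearest(moving, fixed)[1].mean()))


def icp_step(moving, fixed):
    """One ICP iteration: match to closest points, then apply the best rigid fit."""
    matches, _ = nearest(moving, fixed)
    R, t = rigid_fit(moving, matches)
    return moving @ R.T + t


rng = np.random.default_rng(0)
fixed = rng.uniform(-1.0, 1.0, size=(200, 3))  # stand-in for the photoscan vertices
angle = np.radians(10.0)
R0 = np.array([[np.cos(angle), -np.sin(angle), 0.0],
               [np.sin(angle), np.cos(angle), 0.0],
               [0.0, 0.0, 1.0]])
moving = fixed @ R0.T + np.array([0.05, -0.03, 0.02])  # slightly misplaced copy

errors = [nn_rms(moving, fixed)]
for _ in range(12):  # one iteration per press of "Process"
    moving = icp_step(moving, fixed)
    errors.append(nn_rms(moving, fixed))
print([round(e, 4) for e in errors])
```

Each iteration can only lower (or keep) the closest-point error, so repeated runs converge, though possibly to a local minimum if the starting pose is poor; the rough point-based glueing is there to avoid that.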

Now close the Align window, delete the photoscan model from the layer window and export your fitted 123dCatch model as OBJ. You can then import it into the photoscan chunk from which you got the photoscan model.

So now the models are aligned, but as you can see the two boxes are not the same, so when imported into photoscan the model won’t be oriented the same way. I have not figured out yet how to fix that.

Photos from which the models were generated: link

123dCatch model: link

photoscan model: link

123dCatch model fitted to photoscan: link

MeshLab v1.3.3 64-bit: link

Joao

22 July 2015, 17:20

As the last step, you should freeze the roto-translation matrix computed during the alignment phase. For that, simply right-click the moving mesh layer and select "Freeze Current Matrix". Then you can save your model and everything will be aligned when you load things up again.

Cheers!
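The "Freeze Current Matrix" step bakes the layer's 4×4 roto-translation matrix into the vertex data, after which the layer matrix is reset to the identity. A minimal NumPy sketch of what that amounts to, assuming the matrix acts on column vectors [x, y, z, 1] and that only positions and normals need updating; `freeze_matrix` is a hypothetical helper, not a MeshLab function.

```python
import numpy as np


def freeze_matrix(vertices: np.ndarray, normals: np.ndarray, M: np.ndarray):
    """Apply a 4x4 roto-translation matrix M to vertex positions and normals.

    vertices, normals: (N, 3) arrays. M acts on column vectors [x, y, z, 1].
    After this, the mesh needs no separate transform (the matrix becomes identity).
    """
    R, t = M[:3, :3], M[:3, 3]
    new_vertices = vertices @ R.T + t
    # Normals are directions: rotate them, but never translate them
    new_normals = normals @ R.T
    return new_vertices, new_normals


M = np.eye(4)
M[:3, :3] = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]  # 90 degrees about z
M[:3, 3] = [1.0, 2.0, 3.0]
v, n = freeze_matrix(np.array([[1.0, 0.0, 0.0]]), np.array([[1.0, 0.0, 0.0]]), M)
print(v, n)
```

Since the transform is rigid, rotating the normals by R is enough; a transform with non-uniform scale would need the inverse transpose instead.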